The meaning of legal rules -- such as rules of evidence -- depends only in part, but in essential part, on how such rules work. To wit: You do not know the meaning of a legal rule of evidence unless and until you know how the rule works and the consequences that the rule (in its environment) produces. Given this, law professors should be devoting massive amounts of energy to the study of scholarship in artificial intelligence, a/k/a computational intelligence.

I reiterate this preachy conclusion only because a recent UAI list call for papers [CFP] caught my eye and because this CFP, like many other UAI CFPs, reminded me of how little we law teachers (and, very probably, law and economics scholars) know about the meaning and workings of legal rules. [UAI is the acronym for "Uncertainty in Artificial Intelligence."] Take a gander at these extracts from a CFP for a forthcoming (May 8 & 9, 2006) program of CLIMA VII, Seventh International Workshop on Computational Logic in Multi-Agent Systems, at Future University, Hakodate, Japan, http://www.fun.ac.jp/aamas2006/:

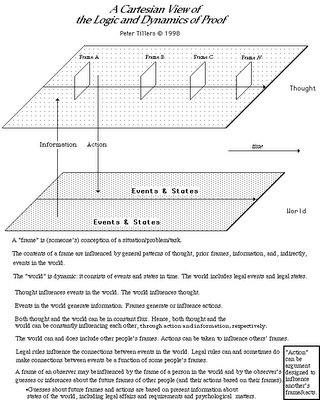

Multi-Agent Systems are communities of problem-solving entities that can perceive and act upon their environment to achieve their individual goals as well as joint goals. ...

The process of litigation and proof involves "multiple agents": such agents (lawyers, clients, judges, other actors) are "problem-solving entities," they "can perceive and act upon their environment," and they can act to "achieve ... individual as well as joint goals."

Computational logic provides a well-defined, general, and rigorous framework for studying syntax, semantics and procedures for various tasks by individual agents, as well as interaction amongst agents in multi-agent systems ....

Consider for a moment some of the kinds of methods that are used to try to decipher multi-agent systems:

* logical foundations of (multi-)agent systems

* extensions of logic programming for (multi-)agent systems

* modal logic approaches to (multi-)agent systems

* logic-based programming languages for (multi-)agent systems

* non-monotonic reasoning in (multi-)agent systems

* decision theory for (multi-)agent systems

* agent and multi-agent hypothetical reasoning and learning

* theory and practice of argumentation for agent reasoning and interaction

* knowledge and belief representation and updates in (multi-)agent systems

* operational semantics and execution agent models

* model checking algorithms, tools, and applications for (multi-)agent logics

* semantics of interaction and agent communication languages

* distributed constraint satisfaction in multi-agent systems

* temporal reasoning for (multi-)agent systems

* distributed theorem proving for multi-agent systems

* logic-based implementations of (multi-)agent systems

* specification and verification of formal properties of (multi-)agent systems

Does the above list of topics suggest that legal scholars have something to learn from their brothers and sisters in computational intelligence and allied fields?

Interdisciplinary work is hard. Interdisciplinary work is humbling. And interdisciplinary work demands humility. I often think that law teachers would do more and better interdisciplinary work if they (law teachers) were not so determined to show how smart they are. There are many intelligent people on earth, and many of them are in disciplines other than law, and some of those people know things law teachers don't. I have found that confessing ignorance while approaching smart people in other disciplines is a fruitful strategy. Your colleagues in foreign disciplines will be flattered by your interest and they will often take the time to explain things to you. Oh yes, remember this: you know some things they don't know, and you're not as stupid as you may sometimes feel you are.

BTW, some law & economics literature seems determined to talk about decision but not about inference. Isn't this a fundamental mistake? If some of the actors or agents in the environment that one wishes to understand and explain have inferential mechanisms or processes in their souls, isn't it clear that the behavior of such agents ordinarily cannot be predicted well if little or no account is taken of the agents' inferential processes? (And please note, you law & economics folk: those inferential mechanisms and processes are extraordinarily complicated, and simplifying such processes "for the sake of argument" is often unjustified.)
